CWE View for Benchmarking

Illustrative Scenario

According to the Top25 dataset, some CVEs are mapped to more than one CWE.

How do we score this scenario?

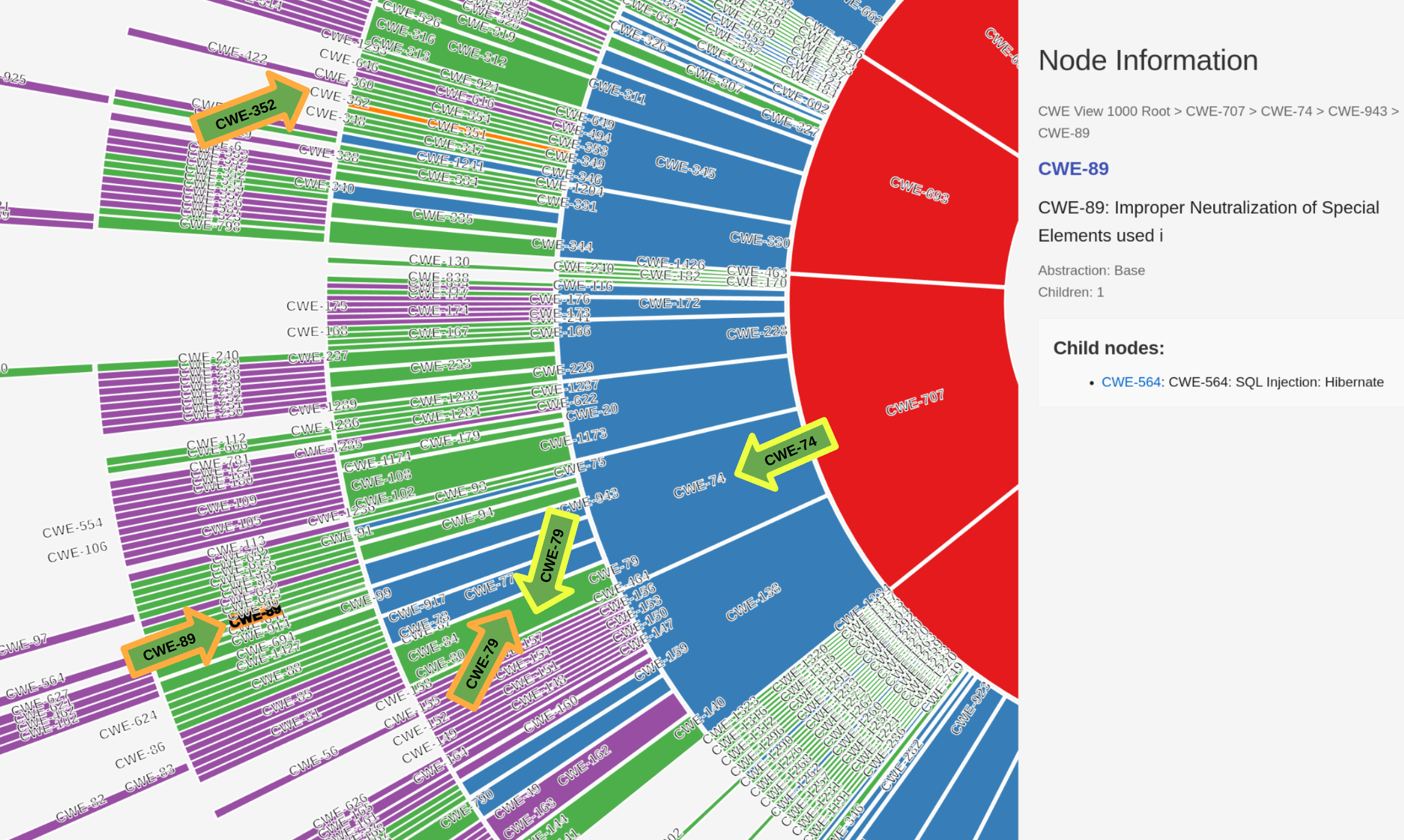

- Benchmark: CWE-79, CWE-74 (yellow arrows)

    - Benchmark is the set of known-good CWE mappings for a given CVE.

- Prediction: CWE-79, CWE-89, CWE-352 (orange arrows)

    - Prediction is the set of CWE mappings assigned to a given CVE by a tool/service.

    - ✅ CWE-79 was correctly assigned.

    - ❓ CWE-89 was assigned. This is more specific than CWE-74.

        - Is this wrong or more right?

        - Does this get partial credit or no credit?

    - ❌ CWE-352 was incorrectly assigned.

        - Is there a penalty for a wrong guess?

Similarly, how would we score the reverse case?

- Benchmark: CWE-79, CWE-89, CWE-352

- Prediction: CWE-79, CWE-74
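The two scenarios above can be explored with a small sketch that labels each predicted CWE as exact, more specific, more general, or unrelated relative to the Benchmark. The `CHILD_OF` map is a hand-written excerpt of ChildOf relationships assumed to match View-1000 (it should be checked against the published CWE data), it keeps a single parent per CWE for simplicity, and the helper names `ancestors`, `relate`, and `compare` are hypothetical.

```python
# Hand-written excerpt of ChildOf edges (child -> parent), ASSUMED to match
# View-1000. Real CWEs can have several parents; this sketch keeps one each.
CHILD_OF = {
    "CWE-79": "CWE-74",
    "CWE-89": "CWE-943",
    "CWE-943": "CWE-74",
    "CWE-74": "CWE-707",
    "CWE-352": "CWE-345",
    "CWE-345": "CWE-693",
}


def ancestors(cwe):
    """Return every ancestor of `cwe` by following ChildOf edges upward."""
    result = set()
    while cwe in CHILD_OF:
        cwe = CHILD_OF[cwe]
        result.add(cwe)
    return result


def relate(predicted, benchmark):
    """Label one predicted CWE relative to a set of Benchmark CWEs."""
    if predicted in benchmark:
        return "exact"
    if ancestors(predicted) & benchmark:
        return "more specific"  # prediction is a descendant of a Benchmark CWE
    if any(predicted in ancestors(b) for b in benchmark):
        return "more general"   # prediction is an ancestor of a Benchmark CWE
    return "unrelated"


def compare(benchmark, prediction):
    """Map each predicted CWE to its label."""
    return {p: relate(p, set(benchmark)) for p in prediction}


first = compare(["CWE-79", "CWE-74"], ["CWE-79", "CWE-89", "CWE-352"])
second = compare(["CWE-79", "CWE-89", "CWE-352"], ["CWE-79", "CWE-74"])
print(first)
# {'CWE-79': 'exact', 'CWE-89': 'more specific', 'CWE-352': 'unrelated'}
print(second)
# {'CWE-79': 'exact', 'CWE-74': 'more general'}
```

The labels only describe each situation; whether "more specific" or "more general" should earn partial credit is exactly the question a scoring scheme has to answer.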

Question

Do we reward proximity, i.e. a prediction that is close to, but not exactly, a Benchmark CWE?

Which View determines proximity, e.g. View-1003 or View-1000?

Do we penalize wrong predictions, i.e. those far from every Benchmark CWE?

Are classification errors in the upper levels of the hierarchy (e.g. classifying a CVE under the wrong Pillar) more severe than those in deeper levels (e.g. classifying a CVE as a Variant instead of a Base)?

Do we reward specificity, i.e. for two CWEs in proximity to the Benchmark CWE(s), do we reward the more specific CWE over the more general one?

Benchmark CWE View Selection

Info

The most specific accurate CWE should be assigned to a CVE, i.e. the choice should not be limited by views that contain only a subset of CWEs, such as View-1003.

- Therefore, the benchmark should support all CWEs.

Tip

The Research View (View-1000) will be used as the CWE View on which benchmark scoring is based.

See the details on the different views and why the Research View (View-1000) is the best view to use.

Benchmark Scoring Algorithm

This section gives an overview of different scoring approaches for CWE benchmarking.

Data Points

Info

A CVE can have one or more CWEs, i.e. this is a multi-label classification problem.

The MITRE CWE list is a rich document containing detailed information on CWEs (~2800 pages).

The RelatedNatureEnumerations form a view-dependent (e.g. CWE-1000) Directed Acyclic Graph (DAG):

- 1309 ChildOf/ParentOf

- 141 CanPrecede/CanFollow

- 13 Requires/RequiredBy

CVE info (Description and References) may lead to some ambiguity as to the best CWE.
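As a baseline, the multi-label framing above can be scored with flat, set-based precision, recall, and F1, which ignore the CWE hierarchy entirely. The sketch below applies that baseline to the illustrative scenario; `flat_scores` is a hypothetical helper name, not part of any existing tool.

```python
def flat_scores(benchmark, prediction):
    """Set-based precision, recall and F1 that ignore the CWE hierarchy."""
    truth, pred = set(benchmark), set(prediction)
    hits = truth & pred  # only exact matches count
    precision = len(hits) / len(pred) if pred else 0.0
    recall = len(hits) / len(truth) if truth else 0.0
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return precision, recall, f1


p, r, f1 = flat_scores(["CWE-79", "CWE-74"], ["CWE-79", "CWE-89", "CWE-352"])
print(round(p, 3), round(r, 3), round(f1, 3))  # 0.333 0.5 0.4
```

Under this baseline CWE-89 and CWE-352 are treated as equally wrong, even though CWE-89 is more specific than the Benchmark's CWE-74. That gap is the motivation for hierarchy-aware scoring.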

Scoring System: Overview

The goal is to assign a numeric score that:

- Rewards exact matches highly.

- Rewards partial matches based on hierarchical proximity (parent-child relationships).

- Penalizes wrong assignments.

- Provides a standardized range (e.g. 0 to 1) for easy comparison.

- Is based on the CWE hierarchy.

- Reinforces good mapping behavior.

Definitions & Assumptions

- Ground Truth (Benchmark): One or more known-good CWEs per CVE.

- Prediction: One or more CWEs assigned by the tested solution.

- Hierarchy: Relationships defined by MITRE’s CWE hierarchy (RelatedNatureEnumerations).

- Proximity: Measured as the shortest hierarchical path (edge distance) between two CWEs in a given View.

- If there is more than one path, the Primary Path is used.
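The proximity definition above can be sketched as a breadth-first search over ChildOf edges treated as undirected. The edge list is a hand-written excerpt assumed to match View-1000 and should be verified against the CWE data; `build_graph` and `edge_distance` are hypothetical names. This sketch does not implement the Primary Path rule: one possible approach, stated here as an assumption, is to load only the ChildOf edges that the CWE data marks as primary for the chosen View.

```python
from collections import deque

# Hand-written excerpt of (child, parent) ChildOf edges, ASSUMED to match
# View-1000; verify against the published CWE data before use.
CHILD_OF_EDGES = [
    ("CWE-79", "CWE-74"),
    ("CWE-89", "CWE-943"),
    ("CWE-943", "CWE-74"),
    ("CWE-74", "CWE-707"),
    ("CWE-352", "CWE-345"),
    ("CWE-345", "CWE-693"),
]


def build_graph(edges):
    """Build an undirected adjacency map from (child, parent) pairs."""
    graph = {}
    for child, parent in edges:
        graph.setdefault(child, set()).add(parent)
        graph.setdefault(parent, set()).add(child)
    return graph


def edge_distance(graph, start, goal):
    """Return the shortest edge count between two CWEs, or None if unreachable."""
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        for neighbour in graph.get(node, ()):
            if neighbour == goal:
                return dist + 1
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, dist + 1))
    return None


GRAPH = build_graph(CHILD_OF_EDGES)
print(edge_distance(GRAPH, "CWE-89", "CWE-74"))   # 2
print(edge_distance(GRAPH, "CWE-79", "CWE-89"))   # 3
print(edge_distance(GRAPH, "CWE-352", "CWE-79"))  # None (no path in this excerpt)
```

Returning `None` for CWEs with no connecting path leaves it to the scoring scheme to decide how such predictions are penalized.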

Interesting Cases

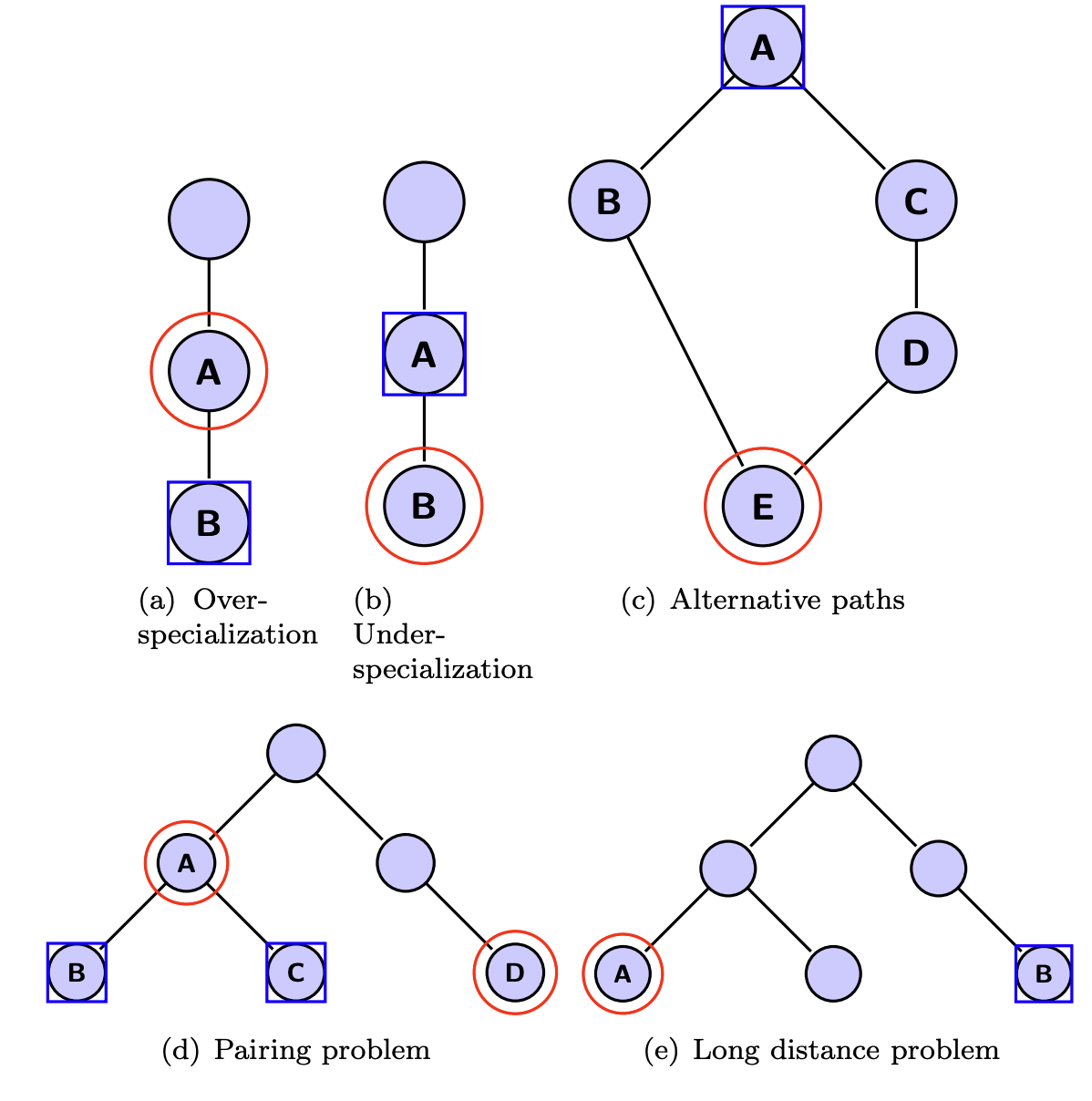

The paper "Evaluation Measures for Hierarchical Classification: a unified view and novel approaches" (June 2013) presents interesting cases that arise when evaluating hierarchical classifiers.

Scoring Approaches

The following scoring approaches are detailed:

- Path-based, using CWE graph distance over ChildOf relationships

- Path-based Proposal

- See Critique

- Hierarchical Classification Standards

Tip

Hierarchical Classification Standards look the most promising.
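As an illustration of what a hierarchy-aware measure can look like, the sketch below computes hierarchical precision, recall, and F1, a commonly used set-based measure from the hierarchical classification literature in which each label set is extended with its ancestors before comparison. It is not necessarily the measure this benchmark adopts. The `CHILD_OF` excerpt is hand-written and assumed to match View-1000, it keeps one parent per CWE, and the function names are hypothetical.

```python
# Hand-written excerpt of ChildOf edges (child -> parent), ASSUMED to match
# View-1000. Real CWEs can have several parents; this sketch keeps one each.
CHILD_OF = {
    "CWE-79": "CWE-74",
    "CWE-89": "CWE-943",
    "CWE-943": "CWE-74",
    "CWE-74": "CWE-707",
    "CWE-352": "CWE-345",
    "CWE-345": "CWE-693",
}


def with_ancestors(cwes):
    """Return the given CWEs together with all of their ancestors."""
    expanded = set()
    for cwe in cwes:
        expanded.add(cwe)
        while cwe in CHILD_OF:
            cwe = CHILD_OF[cwe]
            expanded.add(cwe)
    return expanded


def hierarchical_scores(benchmark, prediction):
    """Hierarchical precision, recall and F1 over ancestor-extended sets."""
    truth = with_ancestors(benchmark)
    pred = with_ancestors(prediction)
    overlap = len(truth & pred)
    hp = overlap / len(pred) if pred else 0.0
    hr = overlap / len(truth) if truth else 0.0
    hf = 2 * hp * hr / (hp + hr) if overlap else 0.0
    return hp, hr, hf


hp, hr, hf = hierarchical_scores(
    ["CWE-79", "CWE-74"], ["CWE-79", "CWE-89", "CWE-352"]
)
print(round(hp, 3), round(hr, 3), round(hf, 3))  # 0.375 1.0 0.545
```

In this example recall is 1.0 because the prediction's ancestors cover every Benchmark CWE, while precision drops because CWE-352 and its ancestors fall outside the Benchmark's part of the hierarchy.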